
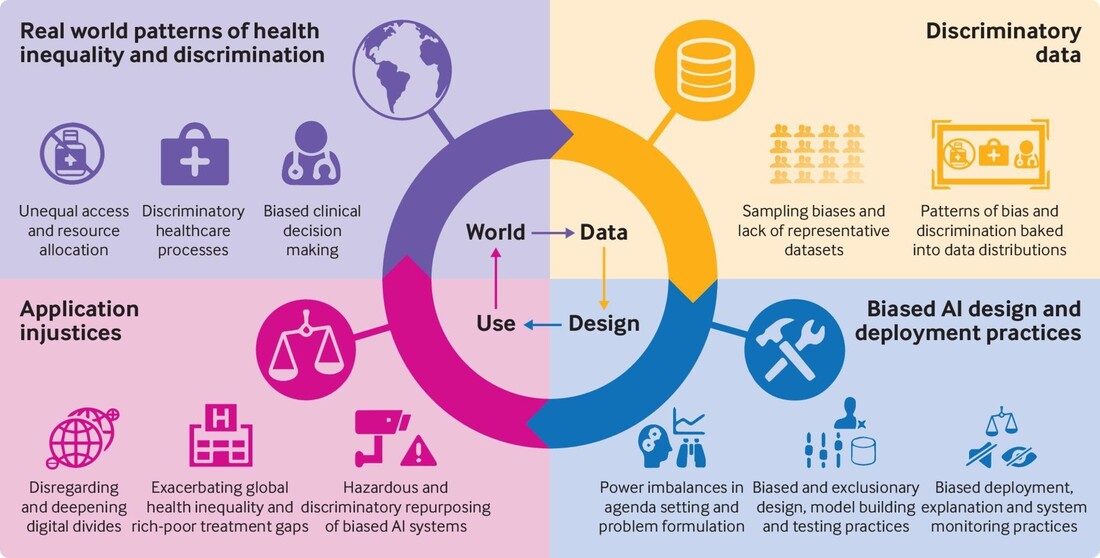

Ethics is the responsibility to act morally. Many industries have stringent regulations governing their products. Mechanical engineering experiments cannot use human subjects because the subjects could die. The auto sector is subject to strict rules that limit emissions. However, there are no equivalent regulations governing the social consequences of AI. Many industries, from healthcare to law to self-driving cars, now employ AI to make vital decisions affecting real people's lives. Can decision-makers comprehend the decisions made by AI?

While working on an Alzheimer's disease study, I realized that gene data is overwhelmingly skewed toward people of European descent. A skin cancer detection algorithm trained on similarly skewed data failed to detect cancer on darker skin tones. How did this go unnoticed for so long?

Narrow artificial intelligence could have long-lasting effects on civilization. Unwanted bias in data is transmitted to models: models trained on biased data are more likely to be prejudiced. Limited data combined with a biased model yields biased output, which is then used to retrain the model, making the bias worse.

Fair data and fair algorithms are crucial components of artificial intelligence. However, the reality is very different. Data contains human bias in several forms. Reporting bias originates from sources of information that are sometimes false: people often conceal information or distort the truth. Selection bias is introduced when truly random selection is not used. Data bias also stems from overgeneralization, implicit prejudice, and stereotypes. For example, a training dataset with more images of women cooking "teaches" an algorithm to constantly associate women with the kitchen and to give less preference to other activities. Sampling bias is introduced when improper data samples are chosen. People of color, for instance, are often difficult to identify in the image samples used to train computer vision algorithms.
Image Credit: British Medical Journal

Confirmation bias is introduced by the tendency of developers to confirm their preconceptions and ignore new information. This cognitive blind spot results in a biased model. One example is using only attractive models to train algorithms for marketing. Instagram's weight-centric algorithm was harming the mental health of teenage girls. Developers who are overconfident that what they see is true may end up building biased models.

There is an urgent need to address ethics in the field of artificial intelligence. The FDA has strict requirements for food labeling and nutrition. I wish we had such regulations for the harmful effects of machine learning algorithms. The Department of Transportation requires annual inspections of commercial vehicles. Why can't software companies undergo a yearly certification of their algorithms?

We should aim to build a Deliberate, Actionable, Scalable AI Governance framework: one that enforces accountability, objectivity, and transparency throughout the life of an algorithm. Many big companies have a model monitoring framework that tracks model explainability, bias, and fairness. But there are no common, overarching principles that define best practices for AI governance. There is a strong need for a central fairness tool that monitors algorithmic bias, that measures the severity of the risk a model introduces by testing its impact on society, and that ensures the model does not violate fundamental human rights.

The accuracy of a model changes over time. As we know, Covid turned everything upside down. Who is checking that critical decision-making models from the pre-Covid era are still functioning correctly? We need regulations that require companies to prove their models are still accurate after such a fundamental, across-the-board shift in the data.
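One way to make the question of a "fundamental shift in the data" concrete is to compare the distribution of a model input before and after the shift. The sketch below uses the population stability index (PSI), a common drift measure, and is only an illustration: the function name, the ten-bin default, and the sample values are assumptions, not part of any existing governance framework.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two samples of one feature; 0.0 means identical binned distributions."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    # Fall back to a width of 1.0 if every value is identical.
    width = (hi - lo) / bins or 1.0

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values before and after a shift (illustrative only).
before_shift = [i / 100 for i in range(100)]
after_shift = [0.5 + i / 200 for i in range(100)]
print(population_stability_index(before_shift, after_shift))
```

A frequently quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but that threshold is a convention rather than a standard, and a real audit would also track the model's accuracy on fresh labeled data.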
We need core guiding principles that protect the privacy of data (for example, through differential privacy), accurately measure drift in data and models, check a model's accuracy over time, and recommend expiry dates for outdated algorithms. As we move into the age of artificial intelligence, we want to ensure everyone has access to ground truth. For example, if I am rejected for a loan, how do I know there was no discrimination? How do I know whether the model was trained to be fair to all age groups, races, and genders?

It is also essential to train the people who are building artificial intelligence. A few years ago, Amazon created a hiring tool that favored male candidates. Algorithms learn from patterns in data, and women are far less represented in technology than men. If such data is used to train hiring algorithms, they will be biased toward men. Perhaps making feminist theory courses mandatory for computer science graduates would help avoid such a situation. Facebook's algorithms failed to detect posts spreading misinformation that endangered public safety. Tesla's self-driving cars are forced to make tough decisions. The people writing these algorithms would benefit from training in moral philosophy and core ethical principles.
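Returning to the loan question above, one simple check an auditor could run is to compare approval rates across groups. The following is a minimal sketch under stated assumptions: the function names, the group labels, and the decision records are all hypothetical, and a ratio like this is only one of several fairness measures.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of approved applications per group."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical loan decisions as (group, approved) pairs, for illustration only.
sample = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 4 + [("group_b", False)] * 6
)
rates = approval_rates(sample)
print(rates, disparate_impact_ratio(rates))
```

In US employment law, the "four-fifths rule" flags a ratio below 0.8 for closer review. Borrowing that threshold for lending is an assumption here, and a low ratio signals a need for investigation rather than proof of discrimination.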



Author: Archita

Archives: January 2023
